Hypervisor

A hypervisor or virtual machine monitor (VMM) is a piece of computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Multiple instances of a variety of operating systems may share the virtualized hardware resources: for example, Linux, Windows, and OS X instances can all run on a single physical x86 machine. This contrasts with operating-system-level virtualization, where all instances (usually called containers) must share a single kernel, though the guest operating systems can differ in user space, such as different Linux distributions with the same kernel.

The term hypervisor is a variant of supervisor, a traditional term for the kernel of an operating system: the hypervisor is the supervisor of the supervisor,[1] with hyper- used as a stronger variant of super-.[lower-alpha 1] The term dates to circa 1970;[2] in the earlier CP/CMS (1967) system the term Control Program was used instead.

Classification

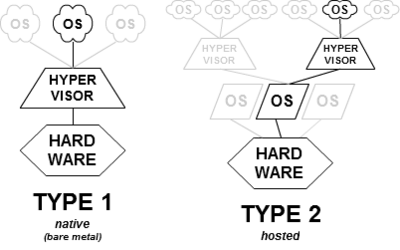

In their 1974 article, Formal Requirements for Virtualizable Third Generation Architectures, Gerald J. Popek and Robert P. Goldberg classified two types of hypervisor:[3]

- Type-1, native or bare-metal hypervisors

- These hypervisors run directly on the host's hardware to control the hardware and to manage guest operating systems. For this reason, they are sometimes called bare metal hypervisors. The first hypervisors, which IBM developed in the 1960s, were native hypervisors.[4] These included the test software SIMMON and the CP/CMS operating system (the predecessor of IBM's z/VM). Modern equivalents include Xen, Oracle VM Server for SPARC, Oracle VM Server for x86, the Citrix XenServer, Microsoft Hyper-V and VMware ESX/ESXi.

- Type-2 or hosted hypervisors

- These hypervisors run on a conventional operating system just as other computer programs do. A guest operating system runs as a process on the host. Type-2 hypervisors abstract guest operating systems from the host operating system. VMware Workstation, VMware Player, VirtualBox, Parallels Desktop for Mac and QEMU are examples of type-2 hypervisors.

However, the distinction between these two types is not necessarily clear. Linux's Kernel-based Virtual Machine (KVM) and FreeBSD's bhyve are kernel modules[5] that effectively convert the host operating system to a type-1 hypervisor.[6] At the same time, since Linux distributions and FreeBSD are still general-purpose operating systems, with other applications competing for VM resources, KVM and bhyve can also be categorized as type-2 hypervisors.[7]

Mainframe origins

The first hypervisors providing full virtualization were the test tool SIMMON and IBM's one-off research CP-40 system, which began production use in January 1967, and became the first version of IBM's CP/CMS operating system. CP-40 ran on a S/360-40 that was modified at the IBM Cambridge Scientific Center to support Dynamic Address Translation, a key feature that allowed virtualization. Prior to this time, computer hardware had only been virtualized enough to allow multiple user applications to run concurrently (see CTSS and IBM M44/44X). With CP-40, the hardware's supervisor state was virtualized as well, allowing multiple operating systems to run concurrently in separate virtual machine contexts.

Programmers soon re-implemented CP-40 (as CP-67) for the IBM System/360-67, the first production computer-system capable of full virtualization. IBM first shipped this machine in 1966; it included page-translation-table hardware for virtual memory, and other techniques that allowed a full virtualization of all kernel tasks, including I/O and interrupt handling. (Note that its "official" operating system, the ill-fated TSS/360, did not employ full virtualization.) Both CP-40 and CP-67 began production use in 1967. CP/CMS was available to IBM customers from 1968 to early 1970s, in source code form without support.

CP/CMS formed part of IBM's attempt to build robust time-sharing systems for its mainframe computers. By running multiple operating systems concurrently, the hypervisor increased system robustness and stability: Even if one operating system crashed, the others would continue working without interruption. Indeed, this even allowed beta or experimental versions of operating systems—

IBM announced its System/370 series in 1970 without any virtualization features, but added virtual memory support in the August 1972 Advanced Function announcement. Virtualization has been featured in all successor systems (all modern-day IBM mainframes, such as the zSeries line, retain backward compatibility with the 1960s-era IBM S/360 line) The 1972 announcement also included VM/370, a reimplementation of CP/CMS for the S/370. Unlike CP/CMS, IBM provided support for this version (though it was still distributed in source code form for several releases). VM stands for Virtual Machine, emphasizing that all, and not just some, of the hardware interfaces are virtualized. Both VM and CP/CMS enjoyed early acceptance and rapid development by universities, corporate users, and time-sharing vendors, as well as within IBM. Users played an active role in ongoing development, anticipating trends seen in modern open source projects. However, in a series of disputed and bitter battles, time-sharing lost out to batch processing through IBM political infighting, and VM remained IBM's "other" mainframe operating system for decades, losing to MVS. It enjoyed a resurgence of popularity and support from 2000 as the z/VM product, for example as the platform for Linux for zSeries.

As mentioned above, the VM control program includes a hypervisor-call handler that intercepts DIAG ("Diagnose") instructions used within a virtual machine. This provides fast-path non-virtualized execution of file-system access and other operations (DIAG is a model-dependent privileged instruction, not used in normal programming, and thus is not virtualized. It is therefore available for use as a signal to the "host" operating system). When first implemented in CP/CMS release 3.1, this use of DIAG provided an operating system interface that was analogous to the System/360 Supervisor Call instruction (SVC), but that did not require altering or extending the system's virtualization of SVC.

In 1985 IBM introduced the PR/SM hypervisor to manage logical partitions (LPAR).

Operating system support

Several factors led to a resurgence around 2005 in the use of virtualization technology among Unix, Linux, and other Unix-like operating systems:[9]

- Expanding hardware capabilities, allowing each single machine to do more simultaneous work

- Efforts to control costs and to simplify management through consolidation of servers

- The need to control large multiprocessor and cluster installations, for example in server farms and render farms

- The improved security, reliability, and device independence possible from hypervisor architectures

- The ability to run complex, OS-dependent applications in different hardware or OS environments

Major Unix vendors, including Sun Microsystems, HP, IBM, and SGI, have been selling virtualized hardware since before 2000. These have generally been large, expensive systems (in the multimillion-dollar range at the high end), although virtualization has also been available on some low- and mid-range systems, such as IBM's pSeries servers, Sun/Oracle's T-series CoolThreads servers and HP Superdome series machines.

Although Solaris has always been the only guest domain OS officially supported by Sun/Oracle on their Logical Domains hypervisor, as of late 2006, Linux (Ubuntu and Gentoo), and FreeBSD have been ported to run on top of the hypervisor (and can all run simultaneously on the same processor, as fully virtualized independent guest OSes). Wind River "Carrier Grade Linux" also runs on Sun's Hypervisor.[10] Full virtualization on SPARC processors proved straightforward: since its inception in the mid-1980s Sun deliberately kept the SPARC architecture clean of artifacts that would have impeded virtualization. (Compare with virtualization on x86 processors below.)[11]

HP calls its technology to host multiple OS technology on its Itanium powered systems "Integrity Virtual Machines" (Integrity VM). Itanium can run HP-UX, Linux, Windows and OpenVMS. Except for OpenVMS, to be supported in a later release, these environments are also supported as virtual servers on HP's Integrity VM platform. The HP-UX operating system hosts the Integrity VM hypervisor layer that allows for many important features of HP-UX to be taken advantage of and provides major differentiation between this platform and other commodity platforms - such as processor hotswap, memory hotswap, and dynamic kernel updates without system reboot. While it heavily leverages HP-UX, the Integrity VM hypervisor is really a hybrid that runs on bare-metal while guests are executing. Running normal HP-UX applications on an Integrity VM host is heavily discouraged, because Integrity VM implements its own memory management, scheduling and I/O policies that are tuned for virtual machines and are not as effective for normal applications. HP also provides more rigid partitioning of their Integrity and HP9000 systems by way of VPAR and nPar technology, the former offering shared resource partitioning and the latter offering complete I/O and processing isolation. The flexibility of virtual server environment (VSE) has given way to its use more frequently in newer deployments.

IBM provides virtualization partition technology known as logical partitioning (LPAR) on System/390, zSeries, pSeries and iSeries systems. For IBM's Power Systems, the Power Hypervisor (PowerVM) functions as a native (bare-metal) hypervisor in firmware and provides isolation between LPARs. Processor capacity is provided to LPARs in either a dedicated fashion or on an entitlement basis where unused capacity is harvested and can be re-allocated to busy workloads. Groups of LPARs can have their processor capacity managed as if they were in a "pool" - IBM refers to this capability as Multiple Shared-Processor Pools (MSPPs) and implements it in servers with the POWER6 processor. LPAR and MSPP capacity allocations can be dynamically changed. Memory is allocated to each LPAR (at LPAR initiation or dynamically) and is address-controlled by the POWER Hypervisor. For real-mode addressing by operating systems (AIX, Linux, IBM i), the POWER processors (POWER4 onwards) have designed virtualization capabilities where a hardware address-offset is evaluated with the OS address-offset to arrive at the physical memory address. Input/Output (I/O) adapters can be exclusively "owned" by LPARs or shared by LPARs through an appliance partition known as the Virtual I/O Server (VIOS). The Power Hypervisor provides for high levels of reliability, availability and serviceability (RAS) by facilitating hot add/replace of many parts (model dependent: processors, memory, I/O adapters, blowers, power units, disks, system controllers, etc.)

Similar trends have occurred with x86/x86-64 server platforms, where open-source projects such as Xen have led virtualization efforts. These include hypervisors built on Linux and Solaris kernels as well as custom kernels. Since these technologies span from large systems down to desktops, they are described in the next section.

x86 systems

Starting in 2005, CPU vendors have added hardware virtualization assistance to their products, for example: Intel VT-x (codenamed Vanderpool) and AMD-V (codenamed Pacifica).

An alternative approach requires modifying the guest operating-system to make system calls to the hypervisor, rather than executing machine I/O instructions that the hypervisor simulates. This is called paravirtualization in Xen, a "hypercall" in Parallels Workstation, and a "DIAGNOSE code" in IBM's VM. All are really the same thing, a system call to the hypervisor below. Some microkernels such as Mach and L4 are flexible enough such that "paravirtualization" of guest operating systems is possible.

Embedded systems

Embedded hypervisors, targeting embedded systems and certain real-time operating system (RTOS) environments, are designed with different requirements when compared to desktop and enterprise systems, including robustness, security and real-time capabilities. The resource-constrained nature of many embedded systems, especially battery-powered mobile systems, imposes a further requirement for small memory-size and low overhead. Finally, in contrast to the ubiquity of the x86 architecture in the PC world, the embedded world uses a wider variety of architectures and less standardized environments. Support for virtualization requires memory protection (in the form of a memory management unit or at least a memory protection unit) and a distinction between user mode and privileged mode, which rules out most microcontrollers. This still leaves x86, MIPS, ARM and PowerPC as widely deployed architectures on medium- to high-end embedded systems.[12]

As manufacturers of embedded systems usually have the source code to their operating systems, they have less need for full virtualization in this space. Instead, the performance advantages of paravirtualization make this usually the virtualization technology of choice. Nevertheless, ARM and MIPS have recently added full virtualization support as an IP option and has included it in their latest high-end processors and architecture versions, such as ARM Cortex-A15 MPCore and ARMv8 EL2.

Other differences between virtualization in server/desktop and embedded environments include requirements for efficient sharing of resources across virtual machines, high-bandwidth, low-latency inter-VM communication, a global view of scheduling and power management, and fine-grained control of information flows.[13]

Security implications

The use of hypervisor technology by malware and rootkits installing themselves as a hypervisor below the operating system, known as hyperjacking, can make them more difficult to detect because the malware could intercept any operations of the operating system (such as someone entering a password) without the anti-malware software necessarily detecting it (since the malware runs below the entire operating system). Implementation of the concept has allegedly occurred in the SubVirt laboratory rootkit (developed jointly by Microsoft and University of Michigan researchers[14]) as well as in the Blue Pill malware package. However, such assertions have been disputed by others who claim that it would be possible to detect the presence of a hypervisor-based rootkit.[15]

In 2009, researchers from Microsoft and North Carolina State University demonstrated a hypervisor-layer anti-rootkit called Hooksafe that can provide generic protection against kernel-mode rootkits.[16]

Notes

References

- ↑ Bernard Golden (2011). Virtualization For Dummies. p. 54.

- ↑ "How did the term "hypervisor" come into use?".

- ↑ Popek, Gerald J.; Goldberg, Robert P. (1974). "Formal requirements for virtualizable third generation architectures". Communications of the ACM. 17 (7): 412–421. doi:10.1145/361011.361073. Retrieved 2015-03-01.

- ↑ Meier, Shannon (2008). "IBM Systems Virtualization: Servers, Storage, and Software" (PDF). pp. 2, 15, 20. Retrieved 2015-12-22.

- ↑ Dexter, Michael. "Hands-on bhyve". CallForTesting.org. Retrieved 2013-09-24.

- ↑ Graziano, Charles (2011). "A performance analysis of Xen and KVM hypervisors for hosting the Xen Worlds Project". Graduate Theses and Dissertations. Iowa State University. Retrieved 2013-01-29.

- ↑ Pariseau, Beth (15 April 2011). "KVM reignites Type 1 vs. Type 2 hypervisor debate". SearchServerVirtualization. TechTarget. Retrieved 2013-01-29.

- ↑ See History of CP/CMS for virtual-hardware simulation in the development of the System/370

- ↑ Loftus, Jack (19 December 2005). "Xen virtualization quickly becoming open source 'killer app'". TechTarget. Retrieved 26 October 2015.

- ↑ "Wind River To Support Sun's Breakthrough UltraSPARC T1 Multithreaded Next-Generation Processor". Wind River Newsroom (Press release). Alameda, California. 1 November 2006. Retrieved 26 October 2015.

- ↑ Fritsch, Lothar; Husseiki, Rani; Alkassar, Ammar. Complementary and Alternative Technologies to Trusted Computing (TC-Erg./-A.), Part 1, A study on behalf of the German Federal Office for Information Security (BSI) (PDF) (Report).

- ↑ Strobl, Marius (2013). Virtualization for Reliable Embedded Systems. Munich: GRIN Publishing GmbH. pp. 5–6. ISBN 978-3-656-49071-5. Retrieved 2015-03-07.

- ↑ Gernot Heiser (April 2008). "The role of virtualization in embedded systems". Proc. 1st Workshop on Isolation and Integration in Embedded Systems (IIES'08). pp. 11–16.

- ↑ "SubVirt: Implementing malware with virtual machines" (PDF). University of Michigan, Microsoft. 2006-04-03. Retrieved 2008-09-15.

- ↑ "Debunking Blue Pill myth". Virtualization.info. Retrieved 2010-12-10.

- ↑ Wang, Zhi; Jiang, Xuxian; Cui, Weidong; Ning, Peng (11 August 2009). "Countering Kernel Rootkits with Lightweight Hook Protection" (PDF). Proceedings of the 16th ACM Conference on Computer and Communications Security. CCS '09. Chicago, Illinois, USA: ACM. doi:10.1145/1653662.1653728. ISBN 978-1-60558-894-0. Retrieved 2009-11-11.

External links

| Wikimedia Commons has media related to Hypervisor. |

- Hypervisors and Virtual Machines: Implementation Insights on the x86 Architecture

- A Performance Comparison of Hypervisors, VMware